Key Points

- Anthropic data policy update: 5 urgent changes to know

- Opt-out deadline: September 28, 2025

- Claude chats now default to training AI

- Data is stored for 5 years if you don’t opt out

- Business users remain unaffected by the new policy

Anthropic has changed the rules, and it’s a big deal for anyone using its Claude AI products. Starting now, Claude Free, Pro, and Max users, including those using Claude Code, must choose whether they want their conversations used to train future AI models. The company has set a firm deadline: September 28, 2025.

If you don’t take action, your chats and interactions will be automatically used for AI training and stored for up to five years. That’s a major shift from Anthropic’s earlier stance, where user conversations were deleted after 30 days, unless flagged for policy violations.

Anthropic says it will train its AI models on user data, including new chat transcripts and coding sessions, unless users opt out by September 28

“The updates apply to all of Claude’s consumer subscription tiers, including Claude Free, Pro, and Max, “including when they use… pic.twitter.com/osPS0yIdlR

— Glenn Gabe (@glenngabe) August 28, 2025

This policy does not apply to enterprise-level products such as Claude for Work, Claude for Education, and Claude Gov, or to API use. These business tools continue to enjoy stronger privacy protection, just like OpenAI’s enterprise services.

So why the change? Anthropic says it’s about giving users more control. But critics say it’s really about getting more high-quality data to improve AI performance.

Anthropic introduced default opt-out data sharing for model training on consumer accounts starting September 28, 2025

– Anthropic will train new models using chats and coding sessions from Free, Pro, and Max accounts when users allow the setting, including when they use Claude… pic.twitter.com/4pBy0a9ixW

— Tibor Blaho (@btibor91) August 28, 2025

New Data Policies Spark Confusion and Concern

Anthropic’s blog post explains that by allowing data sharing, users help “improve model safety” and make Claude better at coding, analysis, and reasoning. But that’s only one side of the story.

Training an advanced AI model requires huge volumes of real user data. By tapping into Claude users’ actual conversations, Anthropic gets exactly what it needs to stay competitive with OpenAI and Google. These conversations help Claude become more natural, accurate, and useful.

FYI: Claude Code update to terms of service. @AnthropicAI will start using our chats to train their models and retaining our data for 5yrs.

Opt out option available though on by default. Starts 9/28/2025 pic.twitter.com/qQY8TUCUYt

— Nolan Makatche (@NolanMakatche) August 28, 2025

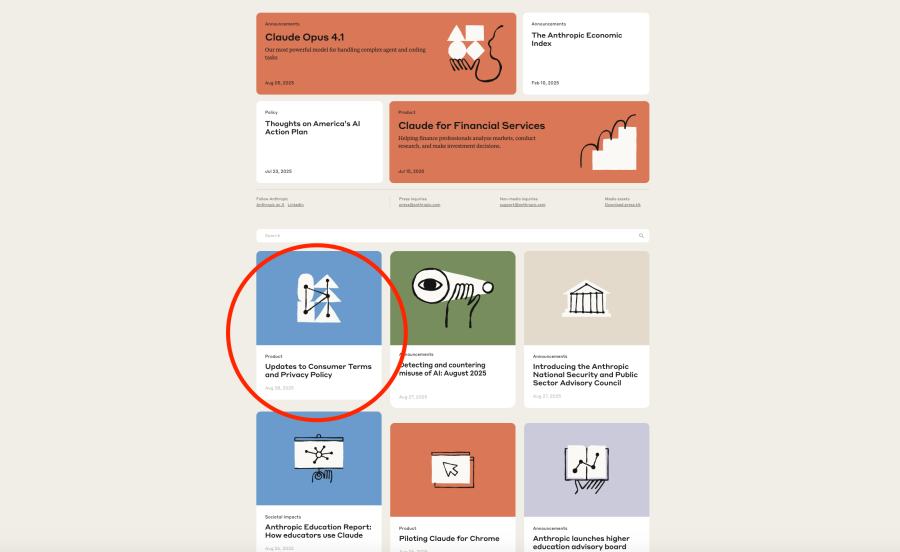

But there’s a problem with how the update is being presented. Existing users see a pop-up called “Updates to Consumer Terms and Policies” with a large “Accept” button. Below it, in much smaller print, is a toggle switch that controls whether chats are used for training. The switch is on by default, so training is allowed unless the user turns it off.

This design has raised serious concerns. It’s easy to miss the toggle and click “Accept,” unknowingly agreeing to share your data. The Verge and other tech outlets have pointed out how this design mimics “dark patterns,” tactics that guide users toward actions they might not fully understand.

Anthropic (Claude AI) is changing terms to allow your chats and coding sessions to be used for training their models and stored for up to 5 years — by default. Users who don’t want training on their data must opt out. Check your settings before Sept 28. pic.twitter.com/kl64jh4hXv

— Lukasz Olejnik (@lukOlejnik) August 28, 2025

Privacy experts say these updates create confusion. AI companies often make sweeping changes with little warning. Most users don’t notice when policies are updated. Some think they’ve deleted their chats, but the data might still exist and be used behind the scenes.

And this is part of a bigger trend. OpenAI is facing legal pressure to store all ChatGPT conversations, even those that users thought were deleted. The case, brought by The New York Times and other publishers, shows just how messy AI and data privacy have become.

Source: Anthropic

What You Can Do Right Now to Protect Your Data

With these new updates in place, the most important thing users can do is take action before the opt-out deadline. Anthropic is making it your responsibility to decide if your data will be used to train its AI. Here’s how you can stay in control:

1. Review your settings immediately

If you’re an existing Claude user, log in and look for the policy update pop-up. Beneath the “Accept” button, you’ll find the training toggle. Switch it off if you don’t want your chats used for training.

2. Double-check your consent

Even if you’ve already clicked “Accept,” go back to your account settings. Anthropic may allow you to change your data-sharing preference after the fact. Look under privacy or data training options.

3. Understand what you’re agreeing to

If you leave the training toggle on, your data can be stored for up to five years. That’s a long time. If you don’t read the fine print, your conversations, whether personal or professional, could end up as training material for AI.

4. Consider using business tools

If you need stronger privacy, look into Claude for Work or API access, where data is not used for training. These products are designed for companies and come with clearer data controls.

5. Stay informed

The AI landscape is changing fast. Companies can, and often do, update their terms with little notice. Bookmark the official Claude blog or follow updates from trusted tech news sites so you don’t miss critical changes.

Remember, AI companies are continually striving to improve, and your data plays a significant role in that effort. Being aware of your rights is your best defense.

The Bigger Picture in AI and User Consent

Anthropic’s shift reflects a larger industry trend: companies collecting more user data under the banner of innovation. But this comes at the cost of clarity and consent. More users are now left confused about what happens to their chats and whether they ever had a real choice.

The Biden administration’s Federal Trade Commission has previously warned that AI companies could face enforcement for misleading users or changing terms without clear notification. But with only three of five commissioners currently active, oversight is limited.

That means it’s up to users to stay alert. Tools like Claude are becoming deeply embedded in daily workflows, from customer service to coding to education. These tools may be free or low-cost, but you’re paying with your data.

The line between helpful AI and surveillance tool is becoming increasingly blurred. When data is the fuel, users become the product.