Key Points

- GPT-5-Codex adjusts its thinking time to each task, working for 7+ hours on the hardest ones

- Beats GPT-5 on top agentic coding benchmarks

- Outperforms in code reviews and large refactoring tasks

- Available now to ChatGPT Plus, Pro, Business, Edu, and Enterprise users

OpenAI has released GPT-5-Codex, a major leap forward in AI-powered coding. The model, launched this week, brings dynamic “thinking time” to programming tasks, and it’s a game-changer.

Instead of using a fixed amount of time for each task, GPT-5-Codex decides in real time how long to work, based on the difficulty of the job. It can spend anywhere from a few seconds to a staggering seven hours or more on a single task.

That flexibility pays off across the board. Whether it’s debugging, refactoring, or writing fresh code, GPT-5-Codex works more carefully and reasons more deeply than its predecessors.

We’re releasing GPT-5-Codex — a version of GPT-5 further optimized for agentic coding in Codex.

Available in the Codex CLI, IDE Extension, web, mobile, and for code reviews in Github. https://t.co/OVGrUovgHN

— OpenAI (@OpenAI) September 15, 2025

The update is part of OpenAI’s Codex toolset and is already rolling out to ChatGPT Plus, Pro, Business, Edu, and Enterprise users. Codex is available on the web and mobile, in the Codex CLI and IDE extension, and for code reviews on GitHub. API access is expected to follow soon.

The launch comes at a time when competition in AI-assisted coding is heating up. With tools like Claude Code, Cursor by Anysphere, and Microsoft’s GitHub Copilot gaining traction, OpenAI is clearly signaling its intent to lead, and GPT-5-Codex may be its strongest move yet.

OpenAI has been making bold moves this year, including its $300 billion deal with Oracle Cloud for model training at scale.

Source: OpenAI – Techtokens

GPT-5-Codex Crushes Benchmarks and Code Reviews

One of the main upgrades in GPT-5-Codex is its ability to scale its own thinking in real time. GPT-5 in ChatGPT relies on a router that decides up front whether a request goes to a fast model or a deeper reasoning model. GPT-5-Codex skips the router.

OpenAI just dropped GPT-5-Codex, a new version of GPT5 which is optimised for coding

Wow, that’s a huge improvement for code refactoring tasks pic.twitter.com/9NY784ttjj

— Melvin Vivas (@donvito) September 16, 2025

Instead, it adjusts its own computing effort mid-task, deciding on the fly whether to keep going. In some tests, the model chose to spend more than seven hours working through complex coding tasks.

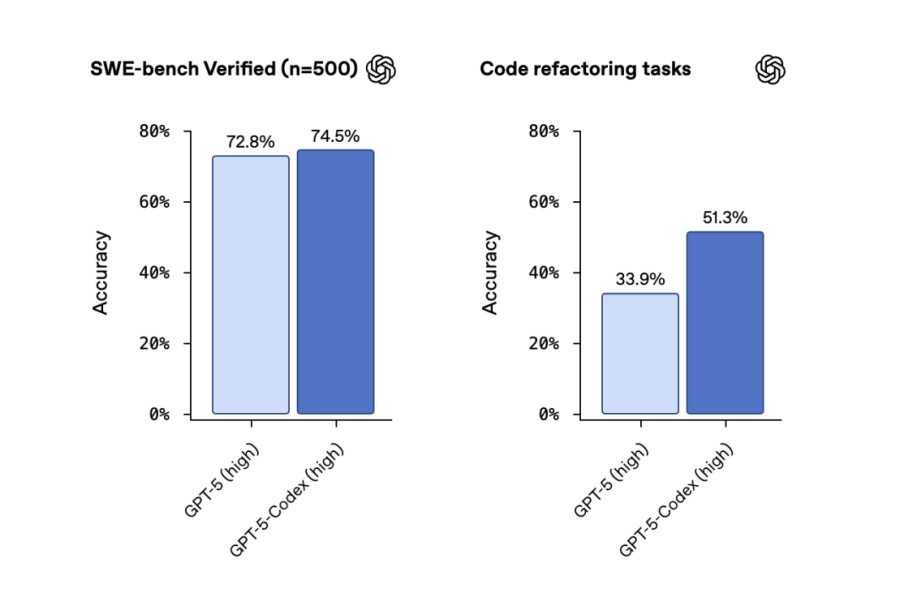

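That flexibility helped GPT-5-Codex outperform GPT-5 on key agentic coding benchmarks, including SWE-bench Verified, where it scored 74.5%. SWE-bench Verified is a human-validated set of real GitHub issues from open-source Python projects, and it tests whether a model can produce a working fix in a realistic setting.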

the new gpt-5-codex is soooo much better (and faster) than normal gpt-5 in codex. great job @sama and @OpenAI team! pic.twitter.com/g9zSQhgSpY

— thegraxisreal (@thegraxisreal) September 16, 2025

The model also excelled at refactoring code in large, established repositories, work that enterprise developers deal with daily.

But coding is only part of the story.

OpenAI also trained GPT-5-Codex to review code, and not just at a basic level. Experienced software engineers assessed the quality of its review comments, and the results were clear:

- It provided fewer incorrect comments

- It made more high-impact suggestions

- It offered detailed feedback on real-world codebases

This capability reflects OpenAI’s broader focus on understanding and managing AI outputs, in line with the work of its Model Behavior Team to make those outputs reliable.

Together, these strengths make GPT-5-Codex a serious assistant for professional development teams, helping improve code quality without adding engineering hours.

OpenAI released upgrades to Codex, introducing GPT-5-Codex – a version of GPT-5 further optimized for agentic coding in Codex

– GPT-5-Codex can work independently for more than 7 hours at a time on large, complex tasks and shows 74.5% accuracy on SWE-bench Verified (now report… pic.twitter.com/iYnGJYgFxz

— Tibor Blaho (@btibor91) September 15, 2025

Why GPT-5-Codex Is a Big Win for Developers

AI coding tools are evolving fast, and GPT-5-Codex is setting a new standard. Developers no longer need to accept one-size-fits-all AI helpers. Instead, they now have a model that thinks more like a human: deciding when to slow down, go deeper, and double-check its work.

This means better output, especially in high-stakes environments like enterprise software development. For developers working on large projects or legacy code, GPT-5-Codex offers meaningful support, not just quick answers.

It also gives OpenAI an edge in a market that’s grown crowded. By mid-2025, Cursor had reportedly hit $500 million in annual recurring revenue, showing massive demand for smart, dev-friendly AI. Meanwhile, Windsurf, another popular AI code editor, was caught in a messy acquisition saga: after a planned OpenAI deal fell through, Google hired away its leadership and Cognition acquired the rest of the company.

Other tech giants are also racing to catch up. Google recently expanded its AI model deployment across key services, and regulatory scrutiny is rising. In one major case, China’s market regulator said Nvidia had violated antitrust law in connection with its roughly $7 billion acquisition of Mellanox.

Even OpenAI CEO Sam Altman has been at the center of attention for testing social media bots at scale, a move that’s raising eyebrows across tech communities.

Against that backdrop, GPT-5-Codex isn’t just another update; it’s a power move. It blends the raw strength of GPT-5 with tools designed for real development workflows.

From writing code to reviewing it, and from quick fixes to 7-hour challenges, this model shows that AI coding assistants can be both flexible and reliable.

Even better, it’s already live for paying ChatGPT users, and this is only the beginning. OpenAI has said API access is coming, which means even more developers will soon be able to test GPT-5-Codex in their own stacks.